|

I build microscopes to capture the transient 3D processes in living systems - things that are too fast and subtle to be seen with the naked eye or existing cameras. I received my Ph.D. from the University of California, Los Angeles (UCLA) in 2024, advised by Prof. Liang Gao and Prof. Tzung Hsiai. We messed around with light field imaging and machine learning to tackle some of the most difficult microscopy challenges, such as 3D mapping of flowing blood cells inside a beating heart (Nat. Methods, 2021) and neural voltage signals within a thinking brain (bioRxiv, 2024). I did my internship at Meta Reality Labs, working on a waveguide-based eye tracker and an LCoS light engine for augmented reality (AR) glasses. Before that, I worked in Prof. Peng Fei's lab on light sheet fluorescence microscopy as an undergraduate. And before that, I was an amateur surfer, on the internet. |

|

|

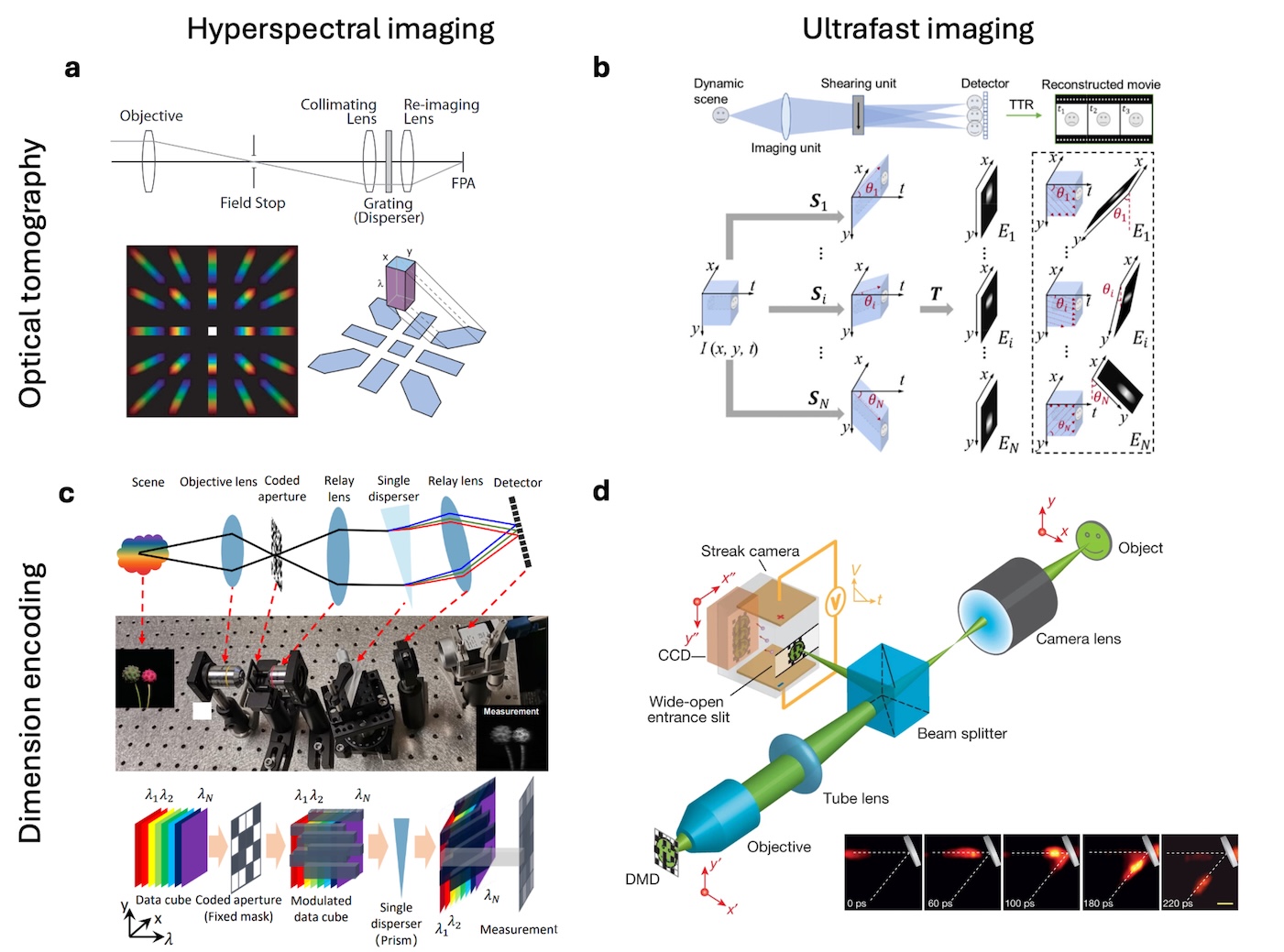

Zhaoqiang Wang, Yifan Peng, Lu Fang, Liang Gao

Optica, 2025

The emergence of computational optical imaging has blurred the boundary between imaging hardware and algorithms. We reviewed the vast landscape of this field and highlighted the synergistic and complementary roles of optics and computation in modern imaging systems. |

|

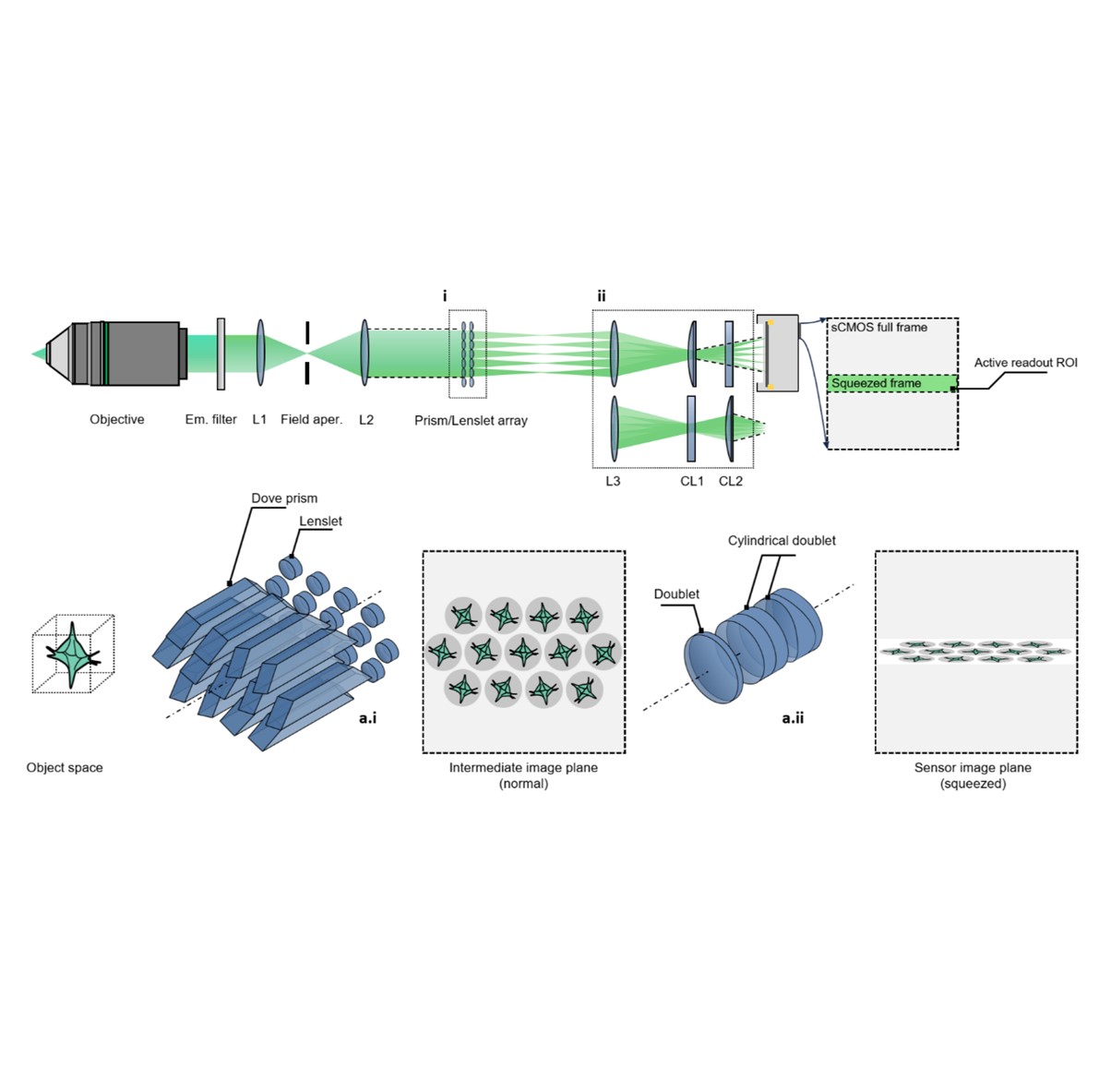

Zhaoqiang Wang, Ruixuan Zhao, Daniel A Wagenaar, Diego Espino, Liron Sheintuch, Ohr Benshlomo, Wenjun Kang, Calvin K. Lee, William C. Schmidt, Aryan Pammar, Enbo Zhu, Jing Wang, Gerard C.L. Wong, Rongguang Liang, Peyman Golshani, Tzung K. Hsiai, Liang Gao

bioRxiv, 2024 | Project page | Code

SPIE Photonics West 2025

We engineered a light field microscope with Dove prisms and an anamorphic lens to exceed kilohertz volumetric frame rates for fluorescence detection. We demonstrated accurate tracking of blood cells in zebrafish larvae and detection of neural voltage spikes in leech ganglion and mouse hippocampus. |

|

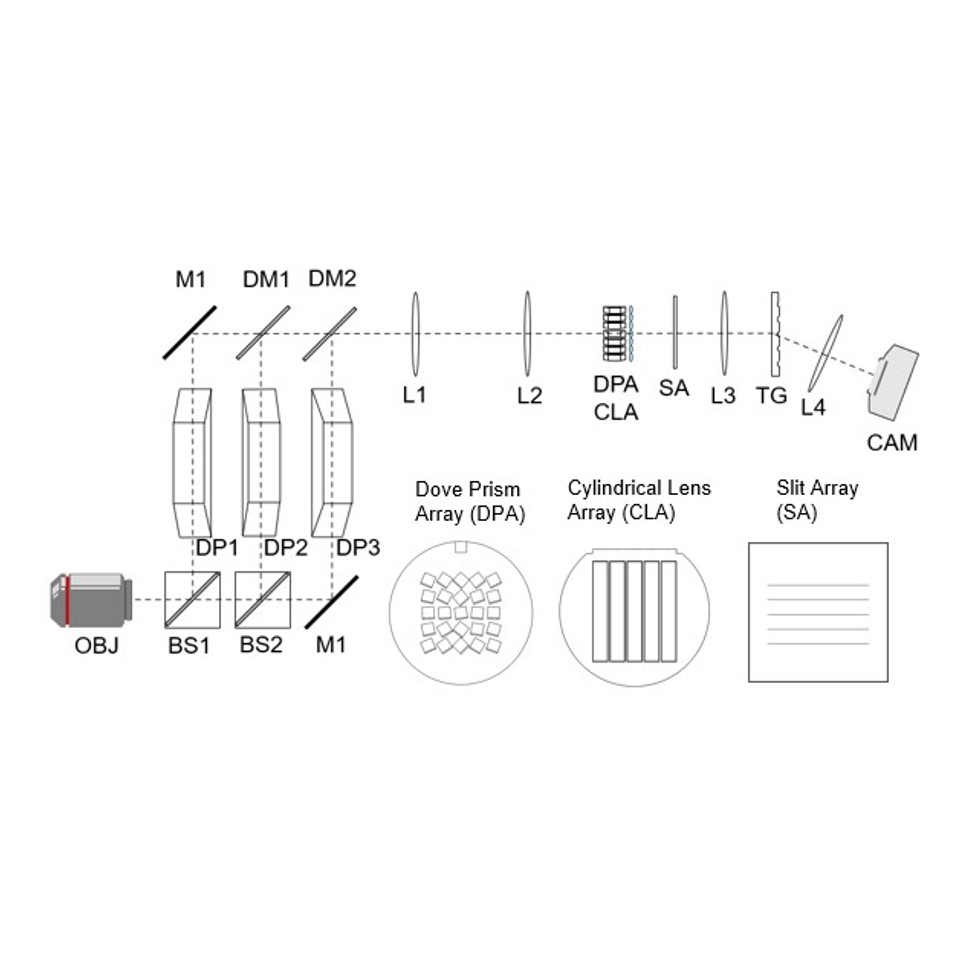

Zhaoqiang Wang, Tzung K. Hsiai, Liang Gao

Optica, 2023

We introduced an in-plane Radon transform and spectral encoding to light field photography, recording sub-aperture images in the form of 1D projections. This allows highly compressive light field measurements, high speed, and a light data load using 1D sensors. |

|

Zhaoqiang Wang, Yichen Ding, Sandro Satta, Mehrdad Roustaei, Peng Fei, Tzung K. Hsiai

PLOS Computational Biology, 2021

We proposed a pipeline to visualize, process and quantify the biomechanics in a beating embryonic zebrafish heart. |

|

Zhaoqiang Wang, Lanxin Zhu, Hao Zhang, Guo Li, Chengqiang Yi, Yi Li, Yicong Yang, Yichen Ding, Mei Zhen, Shangbang Gao, Tzung K. Hsiai, Peng Fei

Nature Methods, 2021 | Code | NM News & Views | UCLA Samueli Newsroom

We trained a convolutional neural network to reconstruct 3D image stacks from raw light field microscope (LFM) measurements. It brings higher spatial resolution, fewer artifacts and higher processing throughput to LFM, without sacrificing its high-speed acquisition capability. |

|

Website template is from Jon Barron ✩ |